Technology and Listening to Music

Apple’s iPod came out two decades ago and changed how we listen to music. Where are we headed now?

Stuart James, Edith Cowan University

On October 23, 2001, Apple released the iPod — a portable media player that promised to overshadow the clunky design and low storage capacity of MP3 players introduced in the mid-1990s.

The iPod boasted the ability to “hold 1,000 songs in your pocket”. Its personalised listening format revolutionised the way we consume music. And with more than 400 million units sold since its release, there’s no doubt it was a success.

Yet, two decades later, the digital music landscape continues to rapidly evolve.

A market success

The iPod expanded listening beyond the constraints of the home stereo system, allowing the user to plug into not only their headphones, but also their car radio, their computer at work, or their hi-fi system at home. It made it easier to entwine these disparate spaces into a single personalised soundtrack throughout the day.

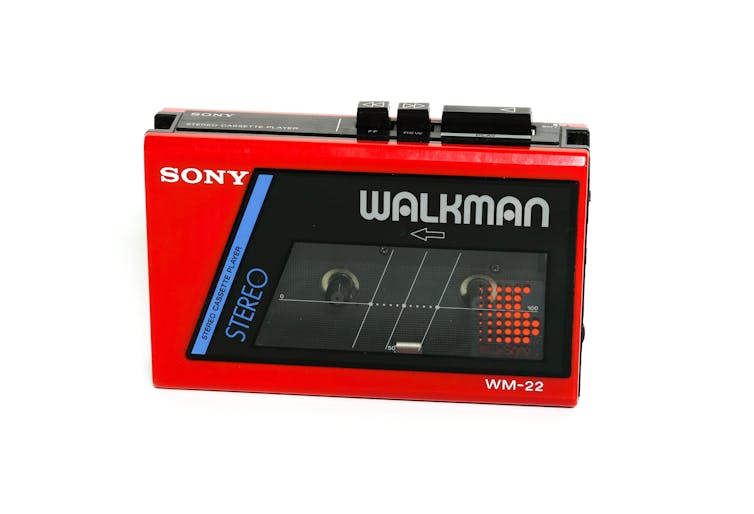

There were several preconditions that led to the iPod’s success. For one, it contributed to the end of an era in which people listened to relatively fixed music collections, such as mixtapes, or albums in their running order. The iPod (and MP3 players more generally) normalised having random collections of individual tracks.

Shutterstock

Then during the 1990s, an MP3 encoding algorithm developed at the Fraunhofer Institute in Germany allowed unprecedented audio data compression ratios. In simple terms, this made music files much smaller than before, hugely increasing the quantity of music that could be stored on a device.
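To get a rough sense of what that compression meant in practice, here is a back-of-the-envelope calculation in Python. It compares a track stored as uncompressed CD audio with the same track as an MP3. The 128 kbps MP3 bitrate and the four-minute track length are assumptions chosen for illustration, not figures from this article.

```python
def audio_size_bytes(bitrate_bps: int, seconds: float) -> int:
    """Size of an audio stream at a constant bitrate, in bytes."""
    return int(bitrate_bps * seconds / 8)


# Uncompressed CD audio: 44,100 samples per second x 16 bits x 2 channels.
CD_BITRATE_BPS = 44_100 * 16 * 2  # 1,411,200 bits per second

# Assumed MP3 bitrate (a common setting, not a figure from the article).
MP3_BITRATE_BPS = 128_000

# Assumed track length: four minutes.
SONG_SECONDS = 4 * 60

cd_bytes = audio_size_bytes(CD_BITRATE_BPS, SONG_SECONDS)
mp3_bytes = audio_size_bytes(MP3_BITRATE_BPS, SONG_SECONDS)

print(f"CD audio: {cd_bytes / 1e6:.1f} MB")
print(f"MP3:      {mp3_bytes / 1e6:.1f} MB")
print(f"Ratio:    {cd_bytes / mp3_bytes:.1f}x smaller")
```

Under these assumptions the MP3 is roughly an eleventh of the size of the uncompressed track. Higher MP3 bitrates would narrow that gap.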

Then came peer-to-peer file-sharing services such as Napster, LimeWire and BitTorrent, released in 1999, 2000 and 2001, respectively. These furthered the democratisation of the internet for the end user (with Napster garnering 80 million users in three years). The result was a fast-changing digital landscape where music piracy ran rife.

The accessibility of music significantly changed the relationship between listener and musician. In 2003, Apple responded to the music piracy crisis by launching its iTunes store, creating an attractive model for copyright-protected content.

Meanwhile, the iPod continued to sell, year after year. It was designed to do one thing, and did it well. But this would change around 2007 with the release of the touchscreen iPhone and Android smartphones.

Computer in your pocket

The rise of touchscreen smartphones ultimately led to the iPod’s downfall. Interestingly, the music app on the original iPhone was called “iPod”.

The iPod’s functions were essentially reappropriated and absorbed into the iPhone. The iPhone was a flexible and multifunctional device: an iPod, a phone and an internet communicator all in one — a computer in your pocket.

And by making the development tools for their products freely available, Apple and Google allowed third-party developers to create apps for their new platforms in the thousands.

It was a game-changer for the mobile industry. And the future line of tablets, such as Apple’s iPad released in 2010, continued this trend. In 2011, iPhone sales overtook the iPod, and in 2014 the iPod Classic was discontinued.

Unlike the Apple Watch, which serves as a companion to smartphones, single-purpose devices such as the iPod Classic are now seen as antiquated and obsolete.

Music streaming and the role of the web

As of this year, mobile devices are responsible for 54.8% of web traffic worldwide. And while music piracy still exists, its influence has been significantly reduced by the arrival of streaming services such as Spotify and YouTube.

These platforms have had a profound effect on how we engage with music as active and passive listeners. Spotify supports an online community-based approach to music sharing, with curated playlists.

Spotify also feeds our listening activity into a range of machine-learning techniques to generate automatic recommendations for us. Both Spotify and YouTube have also embraced sponsored content, which boosts the visibility of certain labels and artists.
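To make the idea of recommendations drawn from listening activity more concrete, here is a deliberately simple sketch in Python. It is not how Spotify or YouTube work. It illustrates one basic approach, sometimes called collaborative filtering, in which tracks are suggested because listeners with overlapping histories have played them. The listener names, track names and the `recommend` function are all hypothetical.

```python
from collections import Counter

# Hypothetical listening histories: listener -> set of tracks they have played.
HISTORIES = {
    "ana": {"track_a", "track_b", "track_c"},
    "ben": {"track_a", "track_b", "track_d"},
    "cho": {"track_b", "track_d", "track_e"},
}


def recommend(listener: str, histories: dict[str, set[str]], k: int = 2) -> list[str]:
    """Suggest up to k unheard tracks, weighted by overlap with other listeners."""
    mine = histories[listener]
    scores = Counter()
    for other, theirs in histories.items():
        if other == listener:
            continue
        overlap = len(mine & theirs)  # number of shared tracks, a crude similarity
        for track in theirs - mine:   # only score tracks the listener has not played
            scores[track] += overlap
    # Sort by score (highest first), then by name so ties are deterministic.
    ranked = sorted(scores.items(), key=lambda item: (-item[1], item[0]))
    return [track for track, score in ranked[:k] if score > 0]


print(recommend("ana", HISTORIES))
```

Even this toy version shows why popular tracks tend to surface first: the more listeners share a track, the higher it scores.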

And while we may want to bypass popular music recommendations — especially to support new generations of musicians who lack visibility — the reality is we’re faced with a quantity of music we can’t possibly contend with. As of February this year, more than 60,000 tracks were being uploaded to Spotify each day.

What’s next?

The experience of listening to music will become increasingly immersive with time, and we’ll only find more ways to seamlessly integrate it into our lives. Some signs of this include:

- Gen Z’s growing obsession with platforms such as TikTok, which is a huge promotional tool for artists lucky enough to have their track attached to a viral trend

- new interactive tools for music exploration, such as Radio Garden (which lets you tune into radio stations from across the globe), the Eternal Jukebox for Spotify and Instrudive

- the use of wearables, such as Bose’s audio sunglasses and bone-conduction headphones, which allow you to listen to music while interacting with the world rather than being closed off, and

- the surge in virtual music performances during the COVID pandemic, which suggests virtual reality, augmented reality and mixed reality will become increasingly accepted as spaces for experiencing music performances.

The industry is also increasingly adopting immersive audio. Apple has incorporated Dolby Atmos 3D spatial audio into both its Logic Pro music production software and music on the iTunes store. With spatial audio capabilities, the listener can experience surround sound with the convenience of portable headphones.

As for algorithms, we can assume more sophisticated machine learning will emerge. In the future, recommendation systems may suggest music based on our feelings. For example, MoodPlay is a music recommendation system that lets users explore music through mood-based filtering.
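As a rough illustration of what mood-based filtering could look like, the Python sketch below places each track on two scales, valence (how positive it sounds) and arousal (how energetic it sounds), then returns the tracks closest to a chosen mood. This is an assumption-laden toy and not a description of MoodPlay's actual method. The catalogue, its scores and the `tracks_near_mood` function are hypothetical.

```python
from math import dist

# Hypothetical catalogue: track -> (valence, arousal), each between 0 and 1.
CATALOGUE = {
    "calm_piano": (0.6, 0.2),
    "sad_ballad": (0.2, 0.3),
    "dance_hit": (0.9, 0.9),
    "angry_punk": (0.2, 0.9),
}


def tracks_near_mood(target, catalogue, radius=0.3):
    """Return tracks whose mood point lies within `radius` of the target, nearest first."""
    nearby = [
        (dist(target, mood), name)
        for name, mood in catalogue.items()
        if dist(target, mood) <= radius
    ]
    return [name for _, name in sorted(nearby)]


# A listener asking for something upbeat and energetic.
print(tracks_near_mood((0.8, 0.8), CATALOGUE))
```

Widening `radius` trades precision for variety, which is one simple way a listener could explore outward from a starting mood.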

Some advanced listening devices even adapt to our physiology. The Australian-designed Nura headphones can pick up information about how a specific listener’s ears respond to different sound frequencies. They purport to automatically adjust the sound to perfectly suit that listener.

Such technologies are taking “personalised listening” to a whole new level, and advances in this space are set to continue. If the digital music landscape has changed so rapidly within the past 20 years, we can only assume it will continue to change over the next two decades, too.

Stuart James, Lecturer and Research Scholar in Composition and Music Technology, Edith Cowan University

This article is republished from The Conversation under a Creative Commons license. Read the original article.